Written By Nathan Bennett

On Tuesday, VMware announced vSphere with built-in Kubernetes. This gives their hypervisor access to Kubernetes clusters, with built-in operations to run and manage them. They are making this change for a good reason: businesses want their applications to run better, and the term “cloud-native” is right at the center of it.

Last year was the first time IT saw businesses spend more money on line-of-business solutions than on IT operations or infrastructure. This seems to imply that IT budgets are moving away from the machines running in datacenters and toward developing the applications that make the company money. It also means companies around the nation are shifting their thinking: they are realizing that their monolithic, slow applications need to run faster, with a lighter load and better availability. Pat Gelsinger, CEO of VMware, even tweeted last week that the idea of “slow and steady wins the race,” long prevalent in business, is almost non-existent now. Speed is key. Mark Zuckerberg’s well-known motto, “Move fast and break things,” has changed the face of the development lifecycle.

There is also another side: application developers often work in an environment that is not friendly to them, where requests for resources or access take far too long. This can lead to fruitless arguments that go in many different directions. The Ops guy says, “If the app ran better, I wouldn’t be spending all my time and budget on better hardware.” The Dev guy says, “If the Ops guy would just give me what I want, I could tweak things so they run better and take that back to my code base.” And the story goes on and on.

Cloud-Native

So, what does cloud-native actually mean? In short, it describes an application built from something called microservices, which abstracts the application away from the underlying operating system so it can run in many different environments. Microservices means breaking your application down into smaller pieces. Instead of building the whole app as a single web front end and database solution with API hooks in multiple locations, you break out each segment on its own: the website front end, the database behind it, and the various services that “hook” into other services.
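To make the decomposition concrete, here is a minimal Python sketch that describes each piece of a hypothetical app (a web front end, a database, and a payments API) as its own Kubernetes Deployment manifest. The service names, container images, ports, and replica counts are illustrative assumptions; the manifest fields themselves (`apiVersion: apps/v1`, `kind: Deployment`, `selector`, `template`) follow the standard Kubernetes Deployment schema. Only the standard library is used, and the output is JSON, which `kubectl` accepts alongside YAML.

```python
import json

# Hypothetical decomposition of a monolithic app into independently
# deployable microservices. Names, images, and ports are illustrative only.
SERVICES = {
    "web-frontend": {"image": "registry.example.com/web-frontend:1.0", "port": 8080, "replicas": 3},
    "catalog-db": {"image": "registry.example.com/catalog-db:1.0", "port": 5432, "replicas": 1},
    "payments-api": {"image": "registry.example.com/payments-api:1.0", "port": 9000, "replicas": 2},
}


def deployment_manifest(name: str, image: str, port: int, replicas: int) -> dict:
    """Build a Kubernetes apps/v1 Deployment manifest for one microservice."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def build_all(services: dict) -> list:
    """One Deployment per microservice, so each piece can be scaled and updated on its own."""
    return [deployment_manifest(name, **cfg) for name, cfg in services.items()]


if __name__ == "__main__":
    for manifest in build_all(SERVICES):
        print(json.dumps(manifest, indent=2))
```

Because each microservice gets its own Deployment, you could scale the front end to more replicas or roll out a new payments image without touching the database, which is the practical payoff of breaking the app apart.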

This is where Kubernetes, the buzzword of the past year or two, comes in, along with VMware’s solution known as Project Pacific.

Project Pacific – vSphere 7 and Kubernetes

Project Pacific (now called vSphere with Kubernetes) leverages built-in services in vSphere 7.0 to let you run Kubernetes on your hypervisor. On its own, that is underwhelming, but just like a modern cloud-native application, it’s not the home that’s important, it’s the group of solutions that comes with it. For instance, Pacific uses NSX to provide load balancing for your pods (groups of one or more containers that run your microservices). This enables highly available applications that live entirely within your private cloud environment, without having to move to a public cloud. For me this was a big point. Running a cluster in the public cloud is great and fun, but at the end of the day it’s tied to a billing cycle. It draws developers in, and then, once the higher-ups hear about the bill, they pull them back out. This often results in a developer trying to run Kubernetes locally on their machine with Minikube or Docker Desktop, which are great tools to experiment with but don’t really reflect an enterprise environment.
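As a rough illustration of the load-balancing piece, the sketch below builds a standard Kubernetes `Service` of `type: LoadBalancer` that fronts the pods of one microservice. It assumes, per the description above, that NSX is the component fulfilling load balancer requests in the cluster; the service name, namespace (`team-a`), label, and ports are hypothetical.

```python
import json


def load_balancer_service(name: str, namespace: str, app_label: str,
                          port: int, target_port: int) -> dict:
    """Build a Kubernetes v1 Service of type LoadBalancer.

    The selector routes traffic to every pod labeled app=<app_label>; the
    load balancer itself is supplied by the platform (assumed to be NSX here).
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    # Hypothetical names: expose the web front end on port 80,
    # forwarding to container port 8080 on each matching pod.
    svc = load_balancer_service("web-frontend-lb", "team-a", "web-frontend", 80, 8080)
    print(json.dumps(svc, indent=2))
```

Because the Service selects pods by label rather than by name, pods can come and go (or be rescheduled onto other hosts) while the load balancer keeps sending traffic to whichever healthy replicas currently match.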

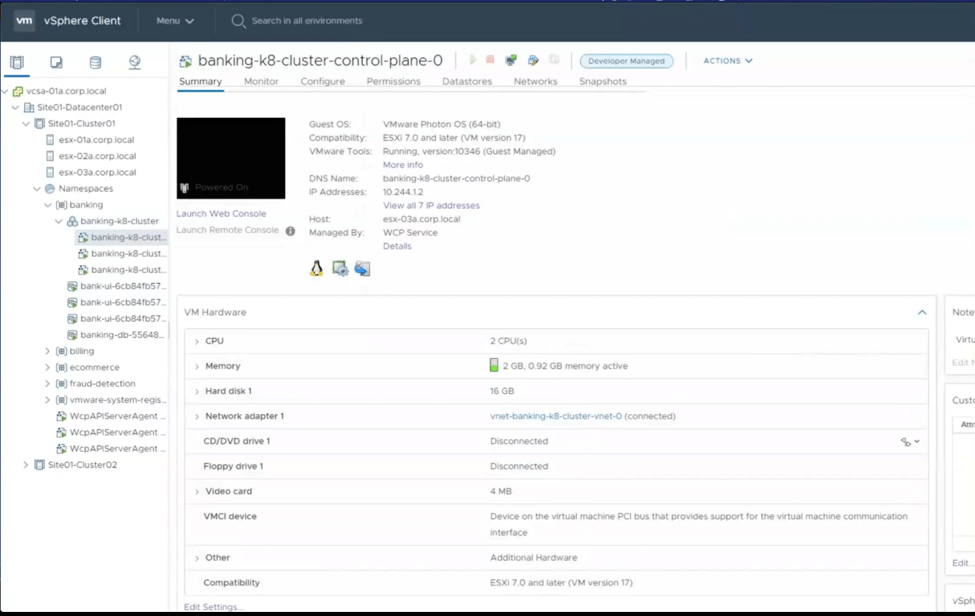

Pacific’s goal is to let an operations user create a Kubernetes cluster (a set of servers) that developers can access, use, and run their microservices on. The operator first deploys the solution with VMware Cloud Foundation (VCF) on their vSphere hosts, then runs another automation step that sets up the needed NSX settings, and finally runs the configuration that builds the workload cluster responsible for deploying their clusters. Once this is done, the operator builds clusters in specific namespaces and grants access to each namespace so that developers can log in to the clusters and build, manage, and run their pods. This isn’t just ops acting as a deploy button that spits out clusters (although I’m sure some devs would be very happy with that); the operations user can also view the clusters as well as the pods.
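The access model described above can be sketched as a toy Python model: the operator creates namespaces and grants edit access, developers can deploy pods only where they have been granted access, and the operator can still see every pod. This is purely illustrative; the `ToyPlatform` class and its methods are hypothetical and are not a VMware or Kubernetes API. In the real product the operator grants access through vSphere rather than through code like this.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Namespace:
    """A namespace the operator owns; developers get access per namespace."""
    name: str
    editors: set[str] = field(default_factory=set)
    pods: list[str] = field(default_factory=list)


class ToyPlatform:
    """Hypothetical toy model of the ops/dev split; not a VMware or Kubernetes API."""

    def __init__(self) -> None:
        self.namespaces: dict[str, Namespace] = {}

    def create_namespace(self, name: str) -> Namespace:
        # Operator action: carve out a namespace for a team.
        ns = Namespace(name)
        self.namespaces[name] = ns
        return ns

    def grant_edit(self, namespace: str, user: str) -> None:
        # Operator action: let a developer work inside one namespace.
        self.namespaces[namespace].editors.add(user)

    def deploy_pod(self, user: str, namespace: str, pod: str) -> None:
        # Developer action: allowed only in namespaces they were granted.
        ns = self.namespaces[namespace]
        if user not in ns.editors:
            raise PermissionError(f"{user} has no edit access to {namespace}")
        ns.pods.append(pod)

    def operator_view(self) -> dict[str, list[str]]:
        # Operator keeps the top-down view: every namespace and its pods.
        return {name: list(ns.pods) for name, ns in self.namespaces.items()}


if __name__ == "__main__":
    platform = ToyPlatform()
    platform.create_namespace("team-a")
    platform.create_namespace("team-b")
    platform.grant_edit("team-a", "dev@example.com")
    platform.deploy_pod("dev@example.com", "team-a", "web-frontend-1")
    try:
        platform.deploy_pod("dev@example.com", "team-b", "web-frontend-2")
    except PermissionError as err:
        print("blocked:", err)
    print(platform.operator_view())
```

The design point the toy captures is that the developer's freedom is scoped to a namespace, while the operator's view spans all of them.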

The graphic above shows five different layers: vCenter, the hosts, the VMs, the clusters, and the pods. It is a great example of how developers can have freedom inside the cluster while the operator keeps a hierarchical view, so the operator can help when things break and set limits if the pods start consuming more resources than they need.

Operational Updates

Along with this huge update and feature set, VMware is also updating a number of its infrastructure products to help operations teams set up this solution, and VMware Cloud Foundation will be used to deploy it.

VMware Cloud Foundation has been a great solution for deploying management and workload clusters on the hypervisor, letting operations easily use, update, and manage their on-premises environment. With this update, VMware Cloud Foundation will deploy the needed software-defined networking through NSX-T as well as software-defined storage through vSAN. As I mentioned above, NSX-T provides the routing and networking used by your Kubernetes clusters and pods. vSAN, the software-defined storage solution, will back Kubernetes persistent volumes, letting pods attach to a storage policy and consume storage directly instead of having a virtual machine play the middleman. vSAN also gets some operator-friendly updates, including NFS file shares, which let you connect to vSAN and use it for file storage. This new vSAN 7.0 feature is releasing at the same time as vSphere with Kubernetes.
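To show what “pods consume storage directly through a storage policy” looks like from the developer’s side, here is a minimal sketch of a standard Kubernetes `PersistentVolumeClaim` plus a pod that mounts it. It assumes the operator has exposed a vSAN storage policy to the namespace as a Kubernetes storage class; the storage class name `vsan-default-storage-policy`, the namespace, the image, the mount path, and the size are hypothetical placeholders.

```python
import json


def persistent_volume_claim(name: str, namespace: str,
                            storage_class: str, size_gi: int) -> dict:
    """Build a Kubernetes v1 PersistentVolumeClaim against a storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Assumed: the vSAN storage policy is exposed under this storage class name.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


def pod_with_claim(name: str, namespace: str, image: str,
                   claim_name: str, mount_path: str) -> dict:
    """Build a v1 Pod that mounts the claim, so it consumes the volume directly."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            "volumes": [{"name": "data",
                         "persistentVolumeClaim": {"claimName": claim_name}}],
        },
    }


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    pvc = persistent_volume_claim("catalog-data", "team-a",
                                  "vsan-default-storage-policy", 10)
    pod = pod_with_claim("catalog-db", "team-a",
                         "registry.example.com/catalog-db:1.0",
                         pvc["metadata"]["name"], "/var/lib/data")
    print(json.dumps([pvc, pod], indent=2))
```

The developer only names a storage class and a size; which datastore and policy actually back the volume stays under the operator’s control.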

Additionally, vSAN comes with a host of other updates in vSphere 7 that I’ve covered in another blog post. Each addition is meant to cut the time operators spend on routine tasks. The vRealize Suite of products also has major updates, which I will discuss in a future post: more extensive automation spanning from Operations to Developers, plus optimization, billing, and monitoring through vRealize Operations.

Conclusion

With speed of business now a higher priority than operational and capacity costs, we have to adjust the products we implement to meet that challenge. VMware’s announcements today are poised to speed up development and operations for its customers. From moving applications to a “cloud-native” architecture to simplifying the day-to-day work of operators and engineers through automation, monitoring, and reporting, these products fit right into the “speed is key” mantra we see today. Businesses have been working toward this change in direction for the past couple of years, and some are ahead of the game. For those that have not yet found a way to achieve this speed, VMware has stepped up to bring a solution to its customers. If you are interested in learning more, take a look at the announcements coming out and see what is a good fit for your environment. I strongly suggest looking at the vRealize Suite for your existing environment, and if you’re building out a new datacenter, building a new disaster recovery site, or just getting started setting up your private cloud, VMware Cloud Foundation is a solid choice. Time will tell how successful these products are, but the demand for speed will shape what businesses need for years to come.