written by Billy Downing

What is Tanzu Kubernetes Grid: Architecture?

Tanzu Kubernetes Grid (TKG) bundles several open-source projects to provide automated provisioning and lifecycle management of Kubernetes clusters.

These include:

- ClusterAPI

- Calico CNI (Antrea was also being explored at the time of writing)

- kubeadm

- vSphere CSI

- etcd

- CoreDNS

- vSphere Cloud Provider

These are coupled with a TKG CLI/UI for ease of use.

In this scenario, we are going to deploy a TKG environment to vSphere 6.7u3. TKG is deployed as virtual machines on top of the underlying infrastructure provider, so it can be deployed to several environments, including vSphere 7 with Kubernetes, vSphere 6.7, and AWS. This gives consumers the ability to easily deploy and manage VMware-supported, upstream-conformant Kubernetes clusters in their existing on-prem vSphere environment. Neither NSX-T nor VMware Cloud Foundation is required at this point.

Figure 1: ClusterAPI Logo, source: https://cluster-api.sigs.k8s.io/

What is TKG when compared to vSphere 7 with Kubernetes?

A common question addressed in forums and blogs is the difference between vSphere 7 with Kubernetes and Tanzu Kubernetes Grid.

To begin with, Tanzu is VMware’s modern application product portfolio. It contains several products, organized around the ‘Build, Run, Manage’ mantra.

vSphere 7 with Kubernetes

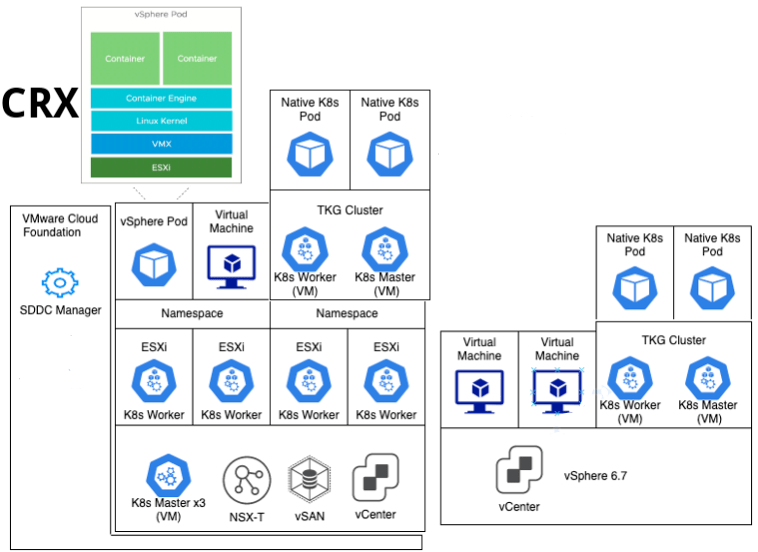

- vSphere 7 with Kubernetes deploys container workloads directly on ESXi hosts, managed by Kubernetes control plane nodes that run as virtual machines. At the time of this writing, the architecture is built on top of VMware Cloud Foundation, which aids in deploying all the necessary components, as shown in Figure 2. To run Linux containers and let Kubernetes schedule workloads on ESXi hosts, VMware had to rewrite some native Linux components to run on ESXi. The two main components are Spherelet and CRX. Installing these packages on an ESXi cluster and enabling Kubernetes in vSphere 7 creates a ‘Supervisor Cluster’ (a cluster that supports Kubernetes workloads, or vSphere Pods, and virtual machines side by side).

- Spherelet – The component that runs on the ESXi hosts and communicates with the Kubernetes control nodes, replacing the kubelet.

- CRX – The container runtime deployed in ESXi to run container workloads.

Figure 2 breaks down the Container Runtime for ESXi (CRX) and shows the three tiers that make it function. Since ESXi is not Linux, VMware created a ‘shim’ virtual machine layer that presents a Linux kernel to containers without the bloat of a typical virtual machine.

More on this can be found here: https://blogs.vmware.com/vsphere/2020/04/vsphere-7-vsphere-pod-service.html

Tanzu Kubernetes Grid

As mentioned above, TKG is a collection of open-source projects bundled together by VMware in a single deployment. VMware also verifies version compatibility and performs some security audits, which relieves users from having to do the same work on their own. As a result, the deployed cluster is built on best practices and supported by VMware. In this scenario, we highlight the usage of ClusterAPI for comparison. ClusterAPI is a project designed to use Kubernetes to manage Kubernetes.

For example, several custom resource definitions, such as ‘Machine’ objects, are created within a ClusterAPI-enabled Kubernetes cluster to extend its API. Much as Kubernetes manages the lifecycle of pods, deployments, and services, ClusterAPI enables a cluster to manage other Kubernetes clusters as objects made up of ‘Machines.’ This means that to deploy a TKG guest (or workload) cluster, you must first deploy a TKG management cluster.
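
To make the "Kubernetes managing Kubernetes" idea more concrete, here is a minimal sketch of a reconcile loop in Python. It is a toy model, not ClusterAPI's actual controller code: `MachineSpec`, `FakeInfrastructure`, and `reconcile` are hypothetical names invented for illustration, and the "infrastructure" is just an in-memory set of VM names.

```python
from dataclasses import dataclass, field


@dataclass
class MachineSpec:
    """Hypothetical, simplified stand-in for a ClusterAPI 'Machine' object."""
    name: str
    role: str  # "control-plane" or "worker"


@dataclass
class FakeInfrastructure:
    """Pretend infrastructure provider that only tracks which VMs exist."""
    vms: set = field(default_factory=set)

    def create_vm(self, name: str) -> None:
        self.vms.add(name)

    def delete_vm(self, name: str) -> None:
        self.vms.discard(name)


def reconcile(desired, infra):
    """Drive the observed VMs toward the declared Machine objects.

    Returns the list of actions taken so the caller can inspect them.
    """
    actions = []
    desired_names = {machine.name for machine in desired}
    # Create VMs for Machines that are declared but do not exist yet.
    for name in sorted(desired_names - infra.vms):
        infra.create_vm(name)
        actions.append(("create", name))
    # Delete VMs that no longer have a matching Machine object.
    for name in sorted(infra.vms - desired_names):
        infra.delete_vm(name)
        actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    infra = FakeInfrastructure(vms={"stale-worker-0"})
    desired = [
        MachineSpec("demo-control-plane-0", "control-plane"),
        MachineSpec("demo-worker-0", "worker"),
    ]
    print(reconcile(desired, infra))  # first pass creates and deletes VMs
    print(reconcile(desired, infra))  # second pass finds nothing left to do
```

The point of the sketch is the shape of the loop: we declare the machines we want, and a controller repeatedly compares that declaration with what exists and closes the gap. A real management cluster does this against vSphere rather than an in-memory set.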

This is where some confusion sets in. Within a vSphere 7 with Kubernetes deployment, the Supervisor Cluster created during install acts as the management cluster for deploying and managing TKG guest clusters. Therefore, after enabling vSphere 7 with Kubernetes, you can immediately create and manage TKG guest clusters across namespaces. Conversely, when deploying TKG clusters to vSphere 6.7, no Supervisor Cluster exists, so there needs to be an automated way to deploy the original management cluster from which to spawn guest clusters (hence the ‘turtles all the way down’ logo for ClusterAPI). How TKG solves this problem is explained in the deployment process section below.

Summary of the Differences: Where the Container Runtime Lives

- vSphere 7 with Kubernetes deploys the container runtime directly on the bare-metal ESXi hosts, whereas TKG deploys the container runtime inside the worker node virtual machines it creates.

- vSphere 7 with Kubernetes is not completely upstream conformant, so limitations exist when deploying extensions, add-ons, and so forth. TKG, by contrast, deploys fully upstream-conformant Kubernetes clusters that you have full control over.

Use-Case: When to use TKG and when to use vSphere 7 with Kubernetes

vSphere 7 with Kubernetes:

- Use when the essential features of container management (native Kubernetes objects) are all that is required

- Use when running containers on bare metal is a requirement

Tanzu Kubernetes Grid:

- Use when full control over the control nodes is required

- Use when fully upstream conformant Kubernetes is required

- Use when cluster customization is needed (extensions, add-ons, etc.)

Reminder: These tools are not mutually exclusive. TKG can run on top of vSphere 7 with Kubernetes to provide the best of both worlds, and each solution can also run independently.

Figure 2 – Overview Architecture of vSphere 7 with Kubernetes versus vSphere 6.7 with TKG Clusters

Deployment Process:

In this scenario, we are going to deploy TKG onto an existing vSphere 6.7u3 environment. For a lot of users, this will be the fastest path to running clusters managed by Tanzu Kubernetes Grid. In this way, we can automate the deployment of Kubernetes clusters without needing vSphere 7 with Kubernetes, NSX-T, or anything else. The result is self-contained, upstream-conformant Kubernetes clusters that we can manipulate however needed.

First Step:

- Gather Tools needed

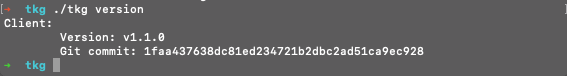

- TKG CLI binary

Figure 3 – Example TKG Version output

- kubectl binary

- Docker Desktop

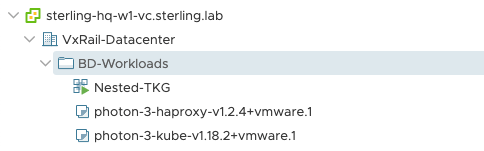

- OVAs/Templates for TKG worker/control nodes and load balancer

Figure 4 – Example Templates needed to deploy TKG clusters (VMware Provided)

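
Before moving on, it can help to confirm that the command-line tools from the list above are actually installed. The following is a minimal sketch, assuming the binaries are on the PATH under the names `tkg`, `kubectl`, and `docker`; those names and the helper `find_missing_tools` are assumptions for illustration. It does not check for the OVA templates, which live in vSphere rather than on the workstation.

```python
import shutil

# Assumed binary names; adjust them to match your workstation.
REQUIRED_TOOLS = ("tkg", "kubectl", "docker")


def find_missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that cannot be found on PATH.

    The lookup function is injectable so the check can be tried out
    without the real binaries being installed.
    """
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = find_missing_tools()
    if missing:
        print("Missing from PATH:", ", ".join(missing))
    else:
        print("All required tools were found on PATH.")
```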

Second Step:

- Deploy Management Cluster

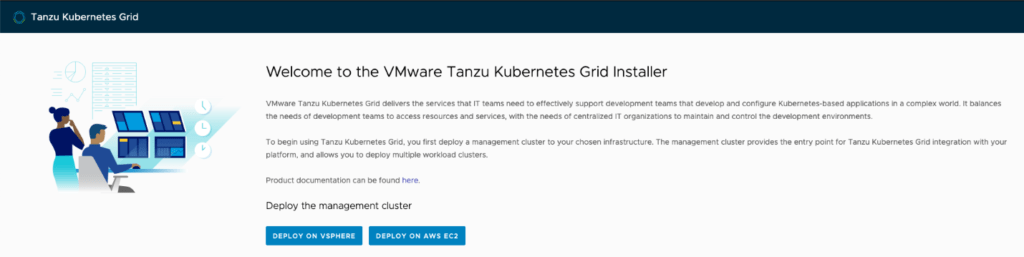

The TKG CLI opens a web app workspace that gathers the required inputs and kicks off the deployment, with options to deploy to AWS or vSphere.

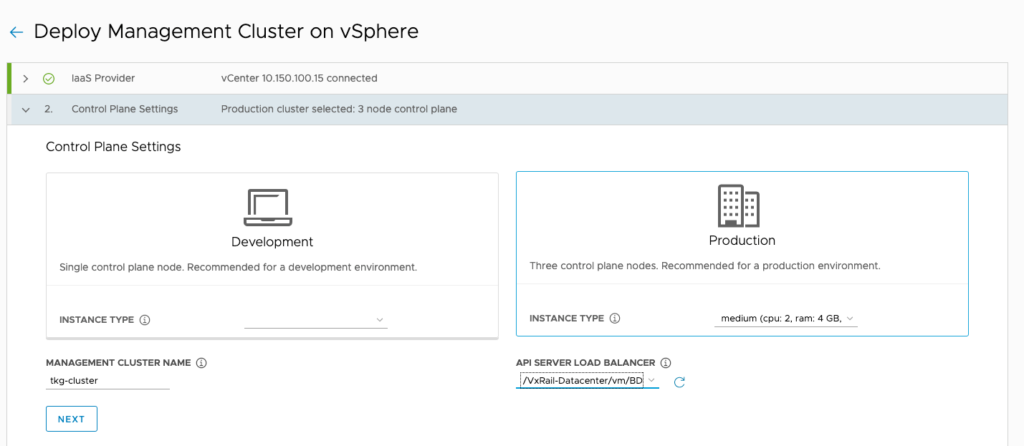

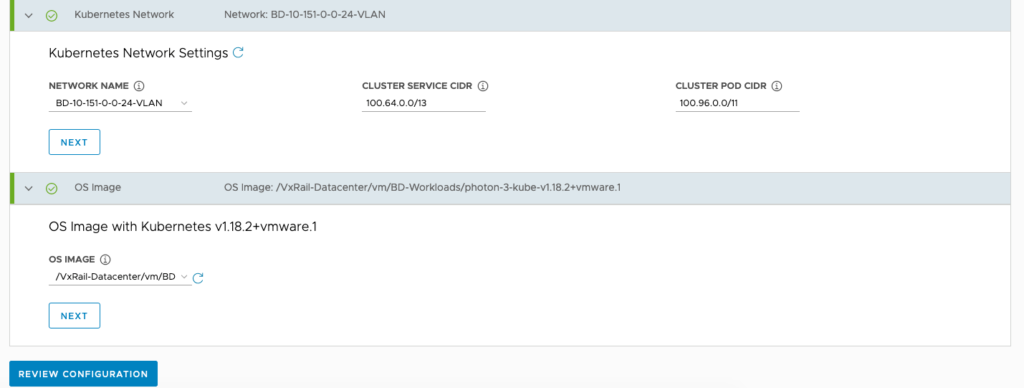

Figures 5 through 7 show examples of the inputs required when deploying a Tanzu Kubernetes Grid management cluster to vSphere 6.7.

Figure 5 – WebUI generated by the TKG CLI ‘tkg init --ui’ command

Figure 6 – Example Input for TKG deployment, selecting the control plane settings, and identifying the Templates uploaded to our environment as part of the prerequisites.

Figure 7 – Example input for TKG deployment, selecting the network attachments (vDS Port Group in this example)

Once the inputs are provided, the process is started.

The trick with ClusterAPI, and the TKG method of deployment, is how to create the initial Kubernetes cluster that then manages and deploys the subsequent clusters. It is a classic chicken-and-egg scenario. If we are managing Kubernetes with Kubernetes and it’s turtles all the way down, where does the original cluster come from?

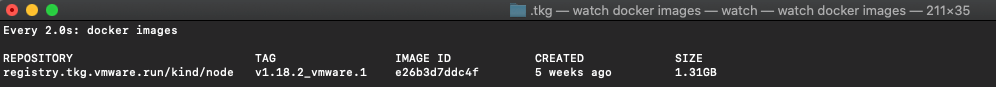

Here is where Docker Desktop, or any other way to run containers locally on your workstation, becomes important. The initial step for deploying a TKG environment is to deploy a KIND (Kubernetes IN Docker) container. TKG uses the local workstation to spawn a KIND environment that serves as the initial kick-off point for subsequent clusters.

Figure 8 – Example Docker Image pulled for KIND version 18 on the local workspace


Figure 9 – Example of the running KIND container acting as the initial Kubernetes ClusterAPI cluster

At a high level, the TKG process automates the deployment of a KIND cluster locally, which is then used to bootstrap the management cluster in our destination vSphere 6.7 environment. Once the management cluster in the destination environment is stood up, the KIND cluster hands over its management role and is terminated, leaving us with a freshly installed Kubernetes management cluster on our destination platform (vSphere 6.7), as shown in Figure 10.
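
The order of events in that bootstrap flow can be sketched in a few lines of Python. This is purely a model of the sequence described above, not what the TKG CLI runs internally: the `Cluster` class, the `bootstrap_management_cluster` function, and the name `kind-bootstrap` are hypothetical and exist only to show who manages what at each step.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    """Hypothetical record of a cluster and whether it holds the management role."""
    name: str
    location: str
    manages_clusterapi_objects: bool = False


def bootstrap_management_cluster(target_name, log=print):
    """Walk through the bootstrap-and-handover sequence, logging each step."""
    # 1. Start a temporary KIND cluster on the local workstation.
    bootstrap = Cluster("kind-bootstrap", "local Docker", True)
    log(f"created {bootstrap.name} in {bootstrap.location}")

    # 2. The temporary cluster provisions the real management cluster.
    management = Cluster(target_name, "vSphere 6.7")
    log(f"{bootstrap.name} provisioned {management.name} in {management.location}")

    # 3. The management role moves to the newly created cluster.
    management.manages_clusterapi_objects = True
    bootstrap.manages_clusterapi_objects = False
    log(f"management role moved to {management.name}")

    # 4. The temporary cluster is no longer needed and is removed.
    log(f"deleted {bootstrap.name}")
    return management


if __name__ == "__main__":
    bootstrap_management_cluster("tkg-mgmt")
```

The detail worth noticing is step 3: after the handover, nothing on the workstation is required for the management cluster to keep running.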

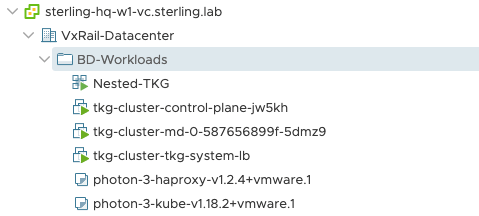

Figure 10 – TKG ClusterAPI management cluster deployed through the TKG WebUI, with one control plane node, one worker node, and one load balancer node

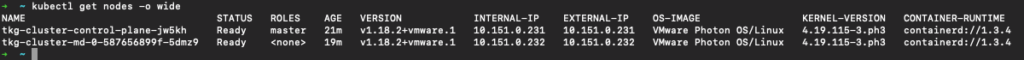

Once logged into the newly deployed cluster using kubectl, we can see the deployed nodes, as shown in Figure 11.

Figure 11 – TKG Management Cluster Nodes

Final Step:

- Deploy and verify workload clusters:

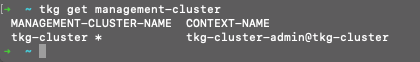

Through the TKG deployment process, we have successfully deployed a management cluster into our vSphere 6.7 environment, which we can now use to deploy additional clusters and manage their lifecycle in order to run workloads.

Figure 12 – TKG CLI displaying a connection to our newly deployed TKG Management Cluster on vSphere 6.7

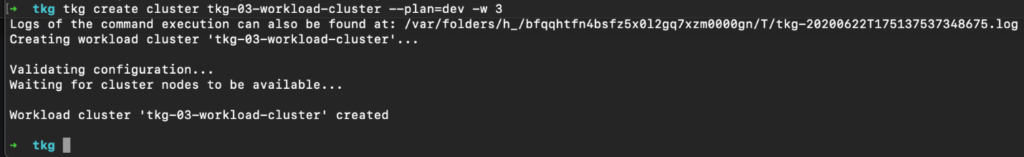

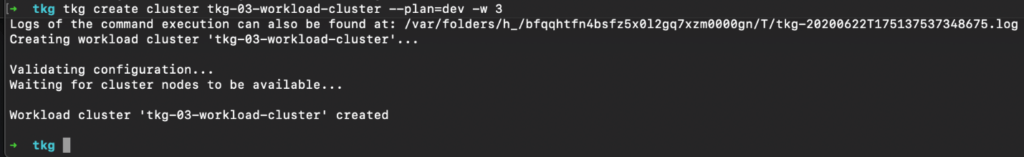

Here, we use the standard TKG CLI to create an entire cluster, named tkg-03-workload, by sending its manifest to the TKG management Kubernetes cluster, as shown in Figure 13.

Figure 13 – Using the TKG CLI to deploy TKG guest (workload) clusters
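
For anyone scripting this step, the sketch below builds the command line for creating a workload cluster. It assumes the `tkg create cluster <name> --plan <plan>` form and the `dev` plan from the TKG 1.x CLI; the options shown in Figure 13 may differ, so check `tkg create cluster --help` for your version. The helper name `build_create_cluster_command` is hypothetical, and the script only prints the command rather than running it.

```python
import shlex


def build_create_cluster_command(name, plan="dev"):
    """Return the argument list for creating a workload cluster.

    The flag and plan names are assumptions based on the TKG 1.x CLI.
    """
    if not name:
        raise ValueError("cluster name must not be empty")
    return ["tkg", "create", "cluster", name, "--plan", plan]


if __name__ == "__main__":
    argv = build_create_cluster_command("tkg-03-workload")
    # Print the command instead of executing it, so this is safe to run anywhere.
    print(shlex.join(argv))
    # To actually run it on a prepared workstation:
    #   import subprocess; subprocess.run(argv, check=True)
```

Keeping the command as a list of arguments, rather than one string, avoids quoting problems if it is later handed to `subprocess.run`.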

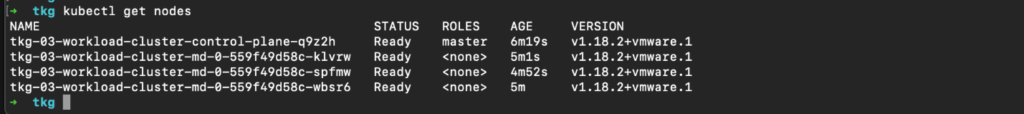

Figure 14 – Using kubectl to interact with our newly deployed TKG guest (workload) cluster


Results

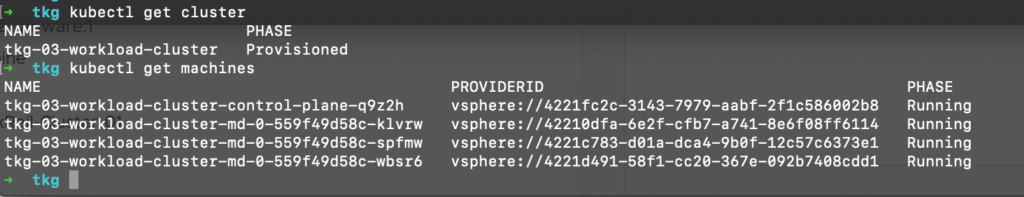

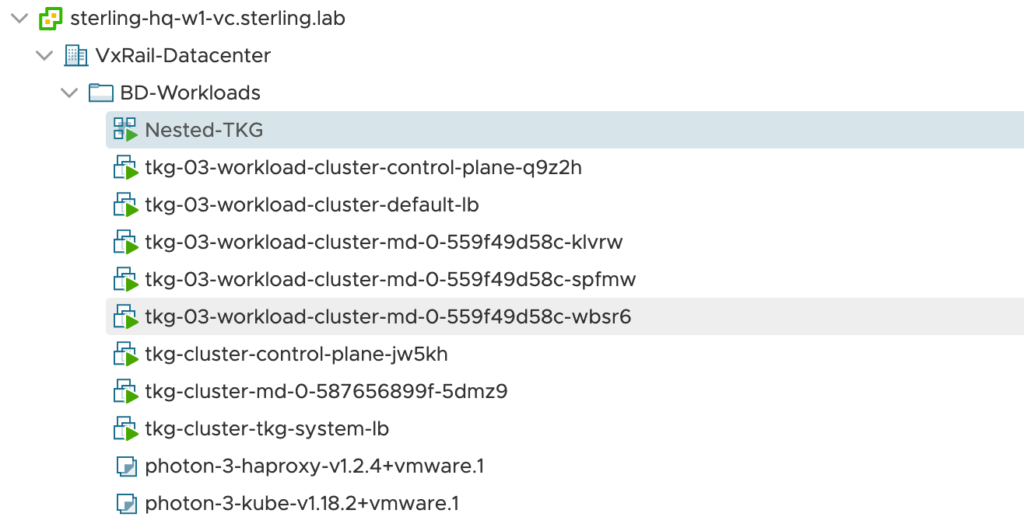

At this point, we’ve used Tanzu Kubernetes Grid to deploy a management Kubernetes cluster that supports all ClusterAPI custom resource definitions and control loops. We then used that management cluster to deploy multiple workload clusters that are ready for application workloads, all on top of our existing vSphere 6.7u3 environment, as shown in Figure 15 and Figure 16.

Figure 15 – Example of viewing ClusterAPI CRD objects, Cluster and Machine


Figure 16 – All machines deployed in our environment without interacting directly with the vSphere client
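
Since Cluster and Machine are ordinary Kubernetes objects, their JSON output can be post-processed like any other resource. Below is a minimal sketch that groups Machines by the cluster they belong to. It assumes the `cluster.x-k8s.io/cluster-name` label that upstream ClusterAPI (v1alpha3) sets on Machines, which is worth verifying against your own cluster, and the sample data is hypothetical, shaped like the output of `kubectl get machines -A -o json`.

```python
from collections import defaultdict

# Assumed label: upstream ClusterAPI records the owning cluster here.
CLUSTER_LABEL = "cluster.x-k8s.io/cluster-name"


def machines_by_cluster(machine_list):
    """Map each cluster name to the sorted names of its Machine objects."""
    grouped = defaultdict(list)
    for item in machine_list.get("items", []):
        metadata = item.get("metadata", {})
        cluster = metadata.get("labels", {}).get(CLUSTER_LABEL, "<unlabelled>")
        grouped[cluster].append(metadata.get("name", "<unnamed>"))
    return {cluster: sorted(names) for cluster, names in grouped.items()}


# Hypothetical sample data; the machine names are made up for illustration.
SAMPLE = {
    "items": [
        {"metadata": {"name": "tkg-03-workload-md-0-abc",
                      "labels": {CLUSTER_LABEL: "tkg-03-workload"}}},
        {"metadata": {"name": "tkg-03-workload-control-plane-xyz",
                      "labels": {CLUSTER_LABEL: "tkg-03-workload"}}},
        {"metadata": {"name": "orphan-machine"}},
    ]
}

if __name__ == "__main__":
    for cluster, names in machines_by_cluster(SAMPLE).items():
        print(cluster, names)
```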

Summary

In summary, we have gone through what makes up a Tanzu Kubernetes Grid cluster, how it differs from vSphere 7 with Kubernetes, and how to deploy a management cluster and a workload cluster to an existing vSphere 6.7u3 environment. Overall, this lets us perform CRUD operations on Kubernetes clusters and provision them as needed without having to upend our existing environment. This is a tremendous use case for those looking to begin migrating applications to container platforms while benefiting from VMware’s automated processes to build their Kubernetes foundation, all without having to upgrade vSphere.