Written by Billy Downing, Cloud Architect, Sterling

Overview

Several industries are exploring use cases for deploying Kubernetes in their environments to take advantage of the efficiency, reliability, scalability, and mobility provided by applications deployed as containers within a robust orchestration system. However, deploying Kubernetes from scratch, and then adding the myriad tools and building blocks necessary to make it a viable production solution, involves a steep learning curve. There are several solutions, with more emerging every day, that help alleviate the pain involved in deploying “Kubernetes, the Hard Way”; however, these don’t necessarily include the building blocks that your organization has already adopted and built its business processes and teams around.

Often, IT organizational structures are created around the technologies they’ve adopted and the need to manage them. It is very common to have dedicated teams, each with nuanced expertise in one platform, that together create a coherent system for effectively running applications. In a world where DevOps is sought after, it is more apparent than ever that teams working closely together rather than in silos, with a shared focus on the application itself instead of just their piece of the infrastructure, can rapidly improve the overall application experience for their consumers.

To this end, it makes sense to adopt a technology such as Kubernetes in a way that conforms with your organizational structure, while still providing the benefits expected from a cloud-native topology. For example, if your team is exploring migration paths for applications from virtual machine-based deployments to Kubernetes, but you are part of a traditional IT organization with siloed departments for compute, network, and storage, the path of least resistance is to adopt the new technology in a way that integrates with the existing infrastructure. If your virtualization team is running vSphere, your network team is focused on Cisco solutions, and your storage team is using Dell PowerStore, then enabling vSphere with Tanzu coupled with Virtual Volume (vVol) integration into PowerStore provides a Kubernetes platform that also supports today's existing virtual-machine infrastructure, without boiling the ocean.

Our intention is to explore a traditional data-center topology and how you can easily incorporate modern platform methodologies to enable self-service environments.

What Are the Components of the Solution?

Architecture

The goal for this environment is to provide a platform on which application owners can deploy virtual workloads alongside their container-based applications, while giving them the ability to provision compute, storage, and networking of their own accord within the resource restrictions set by the operations administrators.

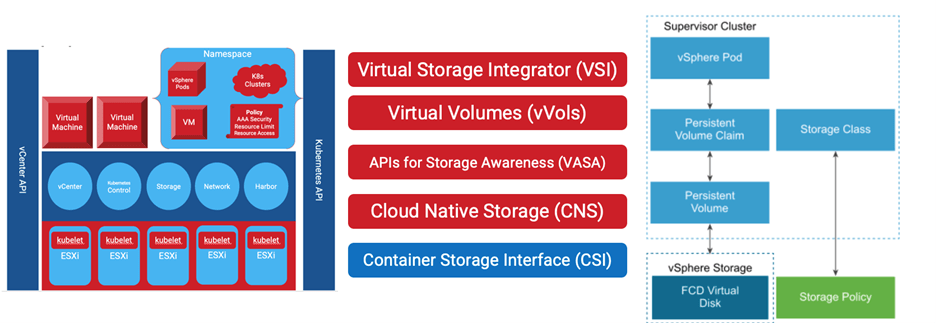

In a VMware environment, you can realize this through the normal Virtual Volume (vVol) integration alongside enabling vSphere with Tanzu to present a Kubernetes API, tying everything together. ESXi hosts become Kubernetes data-plane nodes managed by a dedicated Supervisor control plane, and PowerStore is integrated into vSphere through the vSphere APIs for Storage Awareness (VASA) and Virtual Storage Integrator (VSI). The result is a bidirectional understanding between compute and storage, translated through standard Kubernetes storage classes and persistent volume claims. If an application owner requires a stateful storage device to be provisioned using first-class disks, they simply request a persistent volume claim attached to their workloads through Kubernetes, and the infrastructure handles the rest. Figure 1 is a visualization of the interconnection between our traditional compute environment and our storage platform, including tools such as Cloud Native Storage (CNS) and the Container Storage Interface (CSI) that tie this traditional environment into Kubernetes.

Figure 1: Components
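
The persistent volume claim request described above can be sketched as follows. This is a minimal illustration, not a manifest taken from this environment: the claim name `app-data`, the namespace `team-a`, the storage class `powerstore-gold`, and the 20 Gi size are all hypothetical. The script only builds the object and prints it as JSON, which `kubectl apply -f -` accepts in place of YAML.

```python
import json


def build_pvc(name, namespace, storage_class, size_gi):
    """Return a PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # A single-node read/write volume, the usual choice for block storage.
            "accessModes": ["ReadWriteOnce"],
            # Assumed storage class name; in practice it is whatever the
            # vSphere admins have exposed to the namespace.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


# All names and sizes below are hypothetical.
pvc = build_pvc("app-data", "team-a", "powerstore-gold", 20)
print(json.dumps(pvc, indent=2))
```

The application owner never references a datastore, a vVol, or a PowerStore volume directly; the storage class name is the only link to the underlying infrastructure.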

How Do These Platforms Work Together?

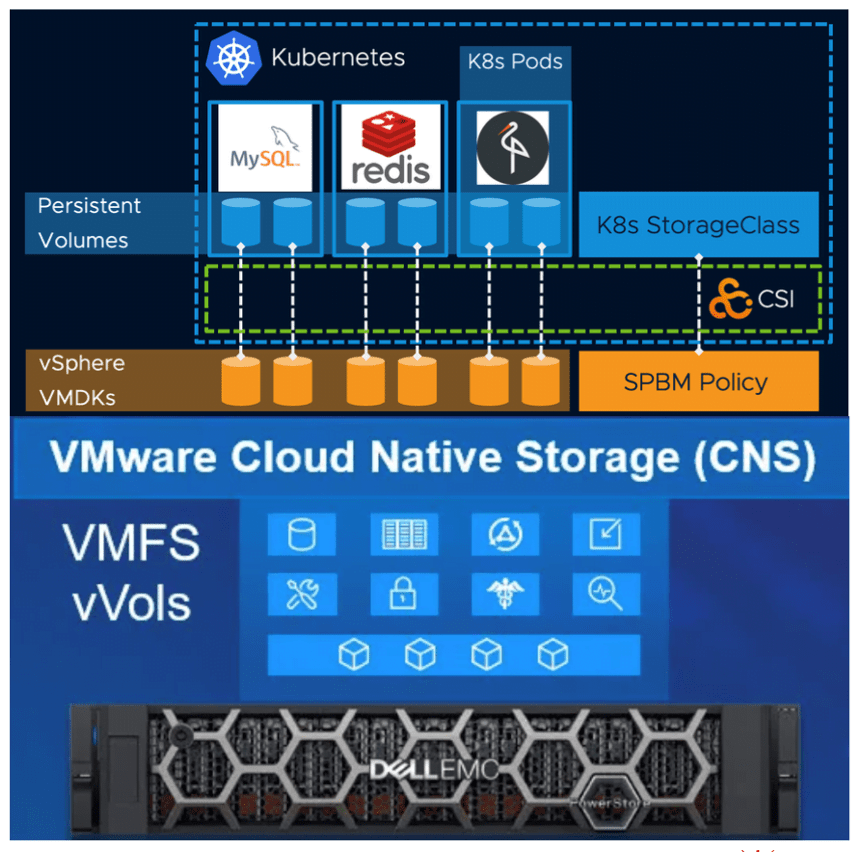

What we end up with is a commonly deployed converged architecture that supports not only our existing workloads but also our existing organizational processes, with the added benefit of an entirely new platform capability on top: Kubernetes. vSphere with Tanzu enables engineers and their pieces of the infrastructure to work closely together through programmatic interfaces and automation. The workflow starts with the storage admins, who give vCenter access to create storage containers dynamically on PowerStore. vCenter admins can then create their own storage policies and storage containers based on performance metrics, and present them to the Kubernetes and application admins through storage classes. Application owners are then given an isolated namespace environment where they can consume storage classes through persistent volume claims, each of which creates a dedicated volume directly on the PowerStore. Overall, as an app admin working on a traditional environment, you can self-provision all aspects of your application within the guardrails set by the operations teams, as portrayed in Figure 2.

Figure 2: Example Cohesive Infrastructure Deployment
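
One way to picture the guardrails in this workflow is a per-storage-class quota on the namespace. The sketch below is an illustration in plain Kubernetes terms, using a standard `ResourceQuota` object. It is not a description of how vSphere with Tanzu enforces limits internally. The quota name `storage-guardrails`, the namespace `team-a`, and the storage class `powerstore-gold` are hypothetical, and the `fits` helper is a simplified stand-in for the admission check the API server performs.

```python
def quota_key(storage_class):
    """Kubernetes quota key for total storage requested from one storage class."""
    return f"{storage_class}.storageclass.storage.k8s.io/requests.storage"


def build_quota(namespace, limits_gi):
    """Return a ResourceQuota manifest capping requested storage per class."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "storage-guardrails", "namespace": namespace},
        "spec": {
            "hard": {quota_key(sc): f"{gi}Gi" for sc, gi in limits_gi.items()}
        },
    }


def fits(quota, storage_class, used_gi, request_gi):
    """Simplified check: would a new claim stay within the namespace quota?"""
    hard = quota["spec"]["hard"].get(quota_key(storage_class))
    if hard is None:
        # Assumption for this sketch only: a class without a quota entry
        # is treated as not offered to the namespace.
        return False
    limit_gi = int(hard[:-2])  # strip the "Gi" suffix written by build_quota
    return used_gi + request_gi <= limit_gi


# Hypothetical namespace and storage class.
quota = build_quota("team-a", {"powerstore-gold": 100})
print(fits(quota, "powerstore-gold", used_gi=80, request_gi=20))
print(fits(quota, "powerstore-gold", used_gi=90, request_gi=20))
```

The operations team owns the limits and the application team owns the requests, which keeps self-service within agreed boundaries.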

Why Would This Be Beneficial?

There are a few reasons to explore Kubernetes solutions that enable brownfield deployments.

Single Platform for Current and Future Workloads

- Using an existing platform, engineers can begin deploying container workloads alongside virtual machines without disruption and without adding physical infrastructure. Sharing a platform across multiple application topologies also allows for more efficient use of resources, as well as a converged engineering skill set, since everything is done through the same interfaces.

Self-Service First-Class Storage

- Application owners, through the Kubernetes API, can dynamically create, attach, and manage the lifecycle of the stateful data within their workloads simply by requesting it programmatically through familiar Kubernetes objects. vSphere with Tanzu translates the Kubernetes request into the appropriate API calls to the storage environment to ensure that the desired state is achieved, relieving application owners from manually requesting storage and relieving storage admins from manually creating volumes.
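
The "attach" step can be sketched as a workload that references an existing claim by name. This is a minimal illustration under stated assumptions: the pod name `web`, the namespace `team-a`, the image `nginx:1.25`, the claim `app-data`, and the mount path are all hypothetical, and the claim is assumed to exist already in the same namespace.

```python
import json


def build_pod(name, namespace, image, claim_name, mount_path):
    """Return a Pod manifest that mounts an existing PersistentVolumeClaim."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Mount the volume named "data" inside the container.
                    "volumeMounts": [{"name": "data", "mountPath": mount_path}],
                }
            ],
            "volumes": [
                {
                    "name": "data",
                    # Reference the claim by name; Kubernetes resolves it to
                    # the volume that was provisioned for the claim.
                    "persistentVolumeClaim": {"claimName": claim_name},
                }
            ],
        },
    }


# All names below are hypothetical.
pod = build_pod("web", "team-a", "nginx:1.25", "app-data", "/var/lib/app")
print(json.dumps(pod, indent=2))
```

Deleting the pod leaves the claim and its data in place. What happens to the volume when the claim itself is deleted depends on the reclaim policy of the storage class.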

Operations and Developer/App Owner Cohesion

- Context-aware, disparate environments such as Kubernetes, vSphere, and PowerStore form a cohesive ecosystem in which each solution can be managed independently and tuned for its own strengths. Rather than servicing day-to-day operations requests, engineers can focus on higher-level problems, knowing that the infrastructure provisions itself on an as-needed basis under the strict policies they have created.

Adopting new technology while potentially shifting organizational paradigms, as in the case of cloud-native technologies, can be difficult. Doing both at the same time adds complexity and increases the number of problems that might arise. By incorporating new technologies into an existing ecosystem, you can manage the organizational shift independently of the technology shift to ensure a smooth transition into a cloud-native world. Using the tools, platforms, and existing skills of vSphere with Tanzu coupled with Dell storage provides a smooth transitional environment within which to experiment and migrate workloads onto a modern, cloud-native platform.