by Nathan Bennett

Having worked in vRealize Automation for the past five years, one thing is for sure: nothing hurts as much as losing your blueprint or module. Solutions that used Maven to upload to GitHub never worked, so you took a risk. Each day was spent hoping that nothing bad would happen to code and projects. When backup solutions arrived, they were a lifesaver. For engineers, developers, and IT professionals, code has been a source of great joy and great pain. The joy comes when everything works: like a kid watching a string of dominoes fall into place, the happiness that results makes it all worthwhile. The opposite is true as well. When that perfect plan falls apart or, worse, disappears, the pain is great.

Let us take a step back from major solutions and talk about a single source of truth. The term normally points to a repository of code. A source control manager is used to push code to, or pull code from, that repository. This can seem like a tedious task, and admittedly, wars will sometimes break out over whose code overwrites whose. It all boils down to the term Git. The name comes from Git SCM, the de facto solution for source control management. Git lets you connect to a repository, whether public or private, and push your code to it. Git itself is not that repository. The name adds further confusion because it also appears in the two biggest repository solutions, GitHub and GitLab. One is not better than the other, and both can be used to hold your code.

Why is this important? Below are a couple of points as to why you would want to use a single source of truth (SSOT).

You can save your work.

This is the baseline reason. Saving code or scripts on a local server is fine until someone destroys it or a ransomware attack happens. It is like a gut punch when a script disappears and you do not know where it went or why. A single source of truth, or repository, saves you from these moments by keeping your code highly available and accessible from more than just your machine. From your code IDE (I am a big fan of VS Code), you can use Git to push your code with the basic ADD > COMMIT > PUSH steps. First, you ADD the file you want to send to the repository. Then, you COMMIT your code with a message (use that -m switch). Finally, you PUSH the commit to the repository. Once everything is in a repository, the question of what happens when you switch machines arises. How do you access the code you just pushed? The answer is the clone action, which pulls the repository down to the machine you want to work on. Because your code is saved in a repository, you can now push and pull from any machine you are working on, and that makes your source of truth much more powerful.
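As a minimal sketch of the ADD > COMMIT > PUSH and clone steps described above, the Python below builds the Git commands as argument lists and only runs them if you call `run_all` inside a real working copy. The helper names, the file name `deploy.ps1`, the remote `origin`, the branch `main`, and the example URL are all assumptions made for illustration.

```python
import shlex
import subprocess


def publish_commands(files, message, remote="origin", branch="main"):
    """Return the add > commit > push sequence as argument lists."""
    return [
        ["git", "add", *files],            # stage the files you want to send
        ["git", "commit", "-m", message],  # record them with a message
        ["git", "push", remote, branch],   # send the commit to the repository
    ]


def clone_command(repo_url, target_dir):
    """Return the command that copies a repository onto a new machine."""
    return ["git", "clone", repo_url, target_dir]


def run_all(commands, cwd=None):
    """Run each command in order, stopping at the first failure."""
    for argv in commands:
        subprocess.run(argv, cwd=cwd, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them, so this is safe anywhere.
    for argv in publish_commands(["deploy.ps1"], "Add deployment script"):
        print(shlex.join(argv))
    # Hypothetical URL, shown only to illustrate the clone step.
    print(shlex.join(clone_command("https://example.com/team/scripts.git", "scripts")))
```

Printing the commands first is a deliberate choice here: you can read exactly what would run before pointing `run_all` at a real repository.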

You can do the same thing for an application on a production machine in your environment. With about three lines of code, Git can be installed, a repository cloned onto your production machine, and applications installed as needed. This takes your source of truth to the next level. The ability to maintain your code, back it up, and then use it to automate your fleet of machines is powerful. From the automation standpoint, this keeps working as you continue pushing your code. You can point those lines of code at a specific branch, so that nothing new is pulled until you want it to be, or you can set the automation to always pull the current version. Either way, the automation scripts never change, and your fleet is always current upon deployment.
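The "about three lines" could look something like the sketch below, which again builds the commands rather than running them. It assumes a Debian-style machine with `apt-get`, and a hypothetical `install.sh` script at the root of the repository; neither comes from the original setup. Passing a branch pins the clone to that branch, while leaving it out clones the repository's default branch.

```python
import shlex


def bootstrap_commands(repo_url, dest, branch=None, install_script="install.sh"):
    """Return install-Git, clone, and install-application commands."""
    clone = ["git", "clone"]
    if branch is not None:
        # Pin the machine to one branch instead of the default branch.
        clone += ["--branch", branch, "--single-branch"]
    clone += [repo_url, dest]
    return [
        ["apt-get", "install", "-y", "git"],  # assumes a Debian-style host
        clone,
        ["sh", f"{dest}/{install_script}"],   # hypothetical installer in the repo
    ]


if __name__ == "__main__":
    # Hypothetical URL, destination, and branch name, for illustration only.
    for argv in bootstrap_commands(
        "https://example.com/team/app.git", "/opt/app", branch="release"
    ):
        print(shlex.join(argv))
```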

You can update code using a CI tool.

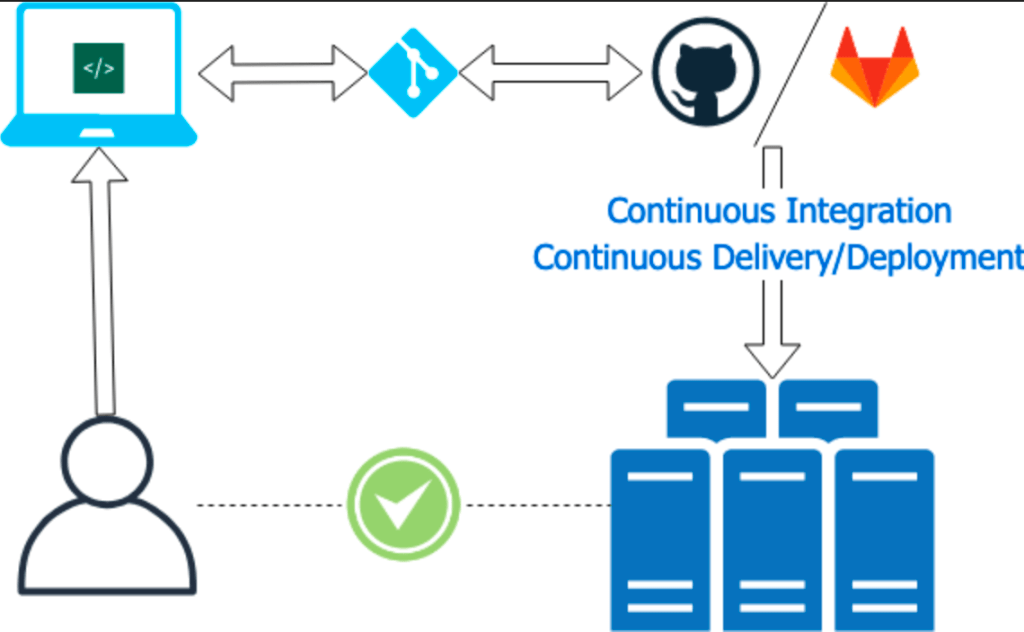

CI is a term tossed around a lot. CI stands for “Continuous Integration,” a toolset that takes the codebase in your single source of truth and pushes it out to your application fleet as updates are made. To some, this sounds drastic and scary, which is why CI governance is key. This is where pull requests come in: a pull request is a statement that says, “My code is ready for production.” A change advisory board (CAB) can then review the change and decide whether it looks good. CI also lets you stipulate which environments the code is sent to. Using a CI tool, you can verify your code in a test or development environment before pushing it into production. We will take a deeper dive into this process in a later blog.
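To make the governance idea concrete, here is a small sketch of the gate a pull request passes through. It is not the API of any particular CI tool: the `PullRequest` class, the environment names, and the rule of two approvals are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    title: str
    tests_passed: bool = False
    approvals: set = field(default_factory=set)  # names of reviewers who approved


def allowed_environment(pr, required_approvals=2):
    """Return the furthest environment this change may be deployed to."""
    if not pr.tests_passed:
        return "development"  # still being worked on
    if len(pr.approvals) < required_approvals:
        return "test"         # verified, but not yet signed off
    return "production"       # verified and approved by the board


if __name__ == "__main__":
    pr = PullRequest("Update deployment script", tests_passed=True)
    print(allowed_environment(pr))
    pr.approvals.update({"reviewer-a", "reviewer-b"})
    print(allowed_environment(pr))
```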

Figure 1. What is commonly referred to as “DevOps” in illustration

Next Steps – Containers

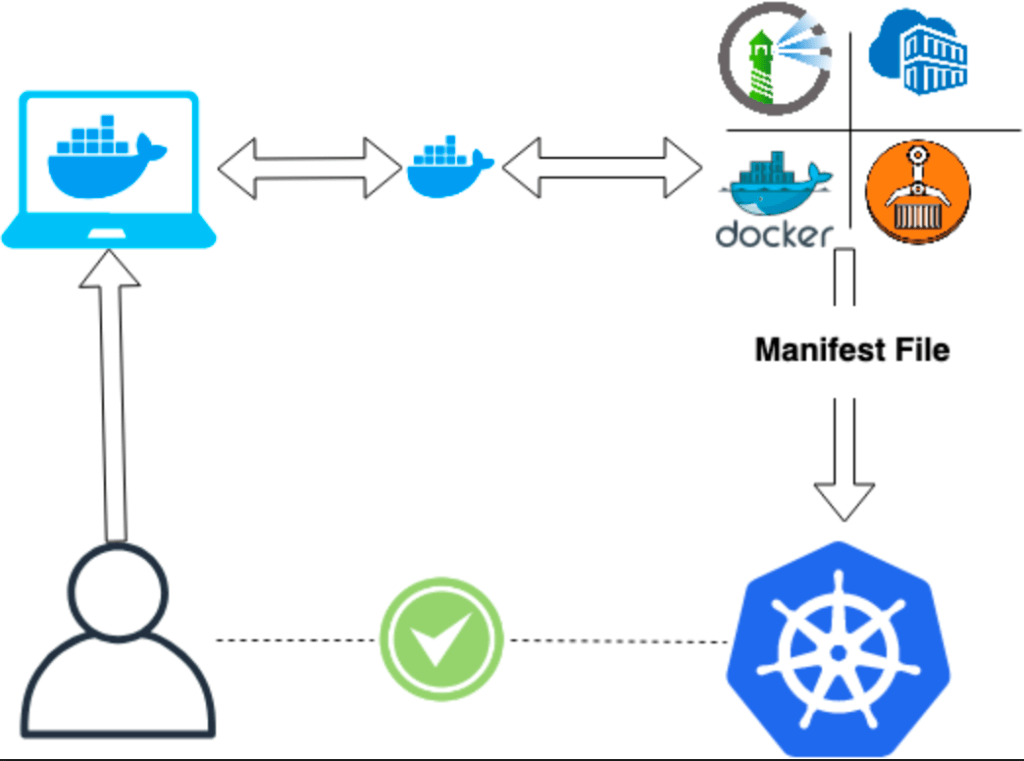

A single source of truth is exceptional for a codebase. Containers take it to the next level. Once you are familiar with CI and your repository, you may start playing around in the container space, using registries. These are akin to repositories, except that instead of storing code, you store the entire image of your application, ready to be deployed into a container. Just as you version your code in a repository, you version your images in a registry. This leads to the same CI solution, where what is pushed to production is not bits of code but whole images. The process becomes even more powerful with an orchestration layer in your container infrastructure. This is where Kubernetes comes in. As many of you are aware, Kubernetes is the darling of the IT space, not because of the raw power of any one machine, but because of the automation and orchestration it brings to containers. Previously, you would set up your image, run commands to deploy your containers, set up the connections to other containers, and then maintain the solution. It was incredibly tedious and time-consuming. Kubernetes orchestrates many of those tasks and lets you send one piece of code that declares what you want. Kubernetes then works out how to deploy it.
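That "one piece of code" is typically a manifest. As a rough sketch, the Python below builds a Kubernetes Deployment manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The application name `web`, the registry address, and the replica count are made up for the example.

```python
import json


def deployment_manifest(name, image, replicas=3):
    """Describe the desired state: how many copies of which image to run."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical registry and image tag, for illustration only.
    manifest = deployment_manifest("web", "registry.example.com/web:1.0")
    print(json.dumps(manifest, indent=2))
```

Notice that nothing here says how to start containers or connect them. The manifest only states the end result, and the orchestration layer handles the steps.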

Let us circle back to registries and repositories. With an orchestration layer that knows which version is currently running, you can have your CI deploy the next version of your container from your image registry into your production cluster. Kubernetes recognizes it as a new version coming in. Users stay connected to the old version while the new version is spinning up. Once the new version is available, Kubernetes moves the connections over to it. This is extremely powerful, as downtime during code updates is a pain point for most businesses.
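One way a CI job might express that version change is to take the existing Deployment manifest, swap in the new image tag, and ask for a rolling update. The sketch below assumes a trimmed-down manifest and made-up image names; `maxUnavailable` and `maxSurge` are standard Deployment strategy fields, and the values shown are one reasonable choice for keeping the old version serving while the new one starts, not the only one.

```python
import copy


def with_new_image(manifest, image, max_unavailable=0, max_surge=1):
    """Return a copy of a Deployment manifest that rolls out a new image."""
    updated = copy.deepcopy(manifest)  # leave the caller's manifest untouched
    updated["spec"]["strategy"] = {
        "type": "RollingUpdate",
        "rollingUpdate": {
            "maxUnavailable": max_unavailable,  # never drop below desired capacity
            "maxSurge": max_surge,              # start this many extra pods at a time
        },
    }
    for container in updated["spec"]["template"]["spec"]["containers"]:
        container["image"] = image
    return updated


if __name__ == "__main__":
    # Trimmed manifest with hypothetical names, for illustration only.
    current = {
        "spec": {
            "template": {
                "spec": {
                    "containers": [
                        {"name": "web", "image": "registry.example.com/web:1.0"}
                    ]
                }
            }
        }
    }
    new = with_new_image(current, "registry.example.com/web:1.1")
    print(new["spec"]["template"]["spec"]["containers"][0]["image"])
    print(new["spec"]["strategy"])
```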

Figure 2. Creation of container images to a Kubernetes cluster

What does this all mean? Getting started with source control matters in today’s IT world, where a codebase stored in a centralized, highly available location is not a luxury but a necessity. Learning how to interact with that codebase is just as necessary. Honestly, some days you will spend hours getting code pushed correctly or sorting out complications between the IDE and the code. The upfront time spent is worth it. For every two to three hours spent saving work in a repository, there is a return on investment ten times over in time saved. You save time not only when pushing code to other machines or into an application, but also when recovering code after an unexpected or disastrous event.

Just as source code is maintained in a repository, the full deployment image is maintained in a registry, and you can then deploy from that registry. In conclusion, using CI, repositories, and registries is worth your time and effort and, more importantly, your peace of mind.